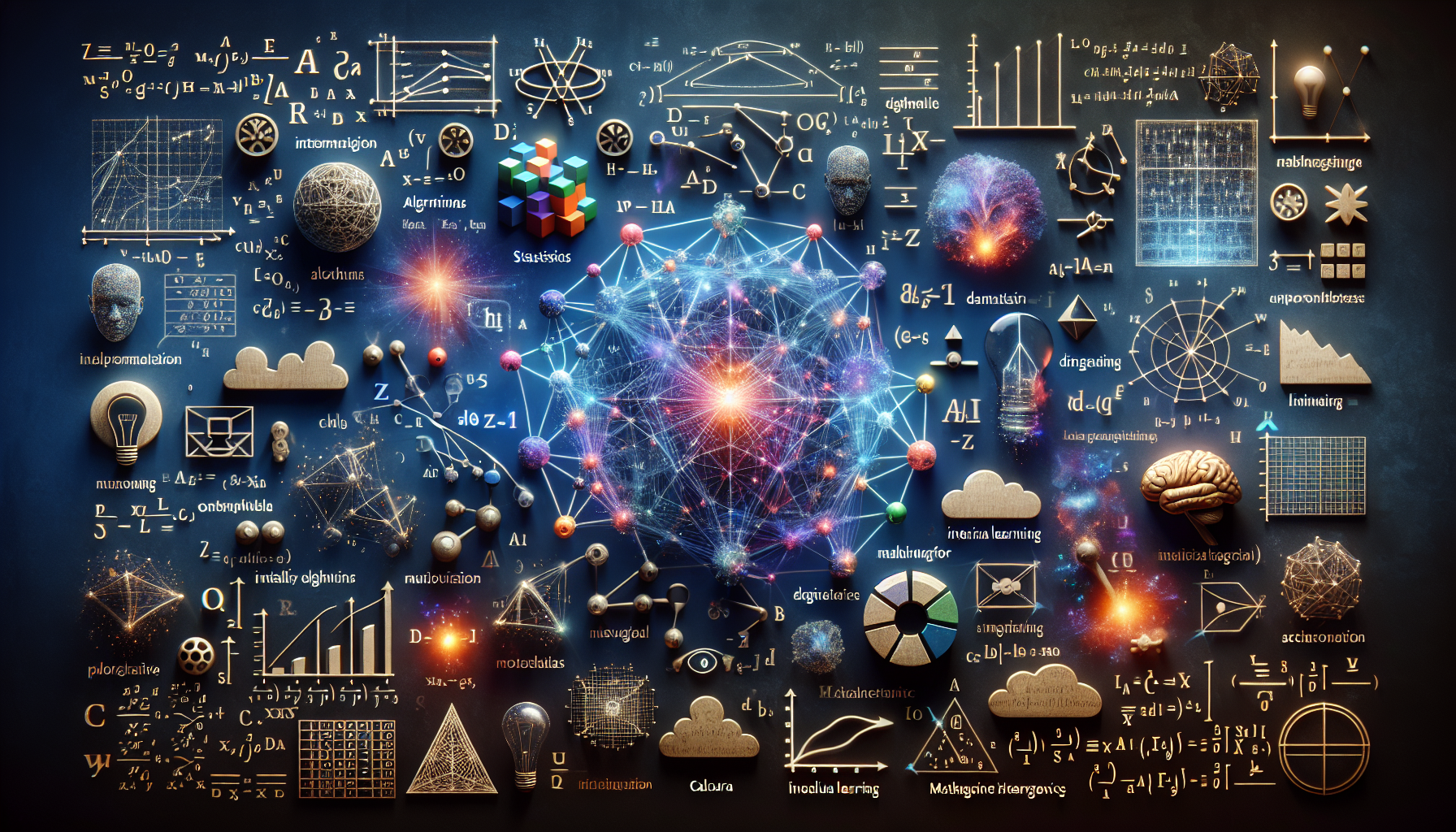

In the fascinating world of artificial intelligence, mathematics serves as the backbone of the field's ever-evolving capabilities. From machine learning algorithms to neural networks, understanding mathematical fundamentals is crucial for anyone delving into AI. Whether you're an aspiring engineer or simply curious about how AI works, this article provides an overview of the basic math concepts that form the foundation of this extraordinary field. Along the way, you'll explore the essential mathematical elements that power the cutting-edge advancements in artificial intelligence.

Numbers and Operations

Understanding Number Systems

To work effectively with numbers in AI, it is important to understand number systems. The most commonly used number system is the decimal system, also known as the base-10 system, which uses ten digits (0-9) to represent all numbers. It is also important to be familiar with the binary system (base-2), which computers use to store and process data, and the hexadecimal system (base-16), a compact notation for binary in which each hex digit stands for exactly four bits. Both appear frequently in computer science and AI programming.
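
A minimal Python sketch of converting a decimal number to another base by repeated division, where each remainder becomes the next digit from right to left. The helper name `to_base` is hypothetical; Python's built-in `int(text, base)` parses the result back to decimal.

```python
def to_base(n: int, base: int) -> str:
    """Return the digits of a non-negative integer n in the given base (2 to 16)."""
    if not 2 <= base <= 16:
        raise ValueError("base must be between 2 and 16")
    digits = "0123456789abcdef"
    if n == 0:
        return "0"
    out = []
    while n > 0:
        n, remainder = divmod(n, base)  # the remainder is the next digit, right to left
        out.append(digits[remainder])
    return "".join(reversed(out))


print(to_base(202, 2))   # 11001010
print(to_base(202, 16))  # ca
print(int("11001010", 2), int("ca", 16))  # 202 202
```

Note how the hex digits `c` (1100) and `a` (1010) line up with the two four-bit halves of the binary result.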

Basic Arithmetic Operations

Having a solid understanding of basic arithmetic operations is essential for performing calculations in AI. These operations include addition, subtraction, multiplication, and division. Addition and subtraction combine or separate quantities, respectively. For whole numbers, multiplication can be viewed as repeated addition (3 × 4 = 4 + 4 + 4), while division splits a quantity into equal parts. These operations serve as the foundation for the more complex algorithms and calculations used in AI.

Properties of Numbers

Understanding the properties of numbers is essential for working with mathematical concepts in AI. Some important properties are the commutative, associative, and distributive properties. The commutative property states that the order in which numbers are added or multiplied does not affect the result (a + b = b + a and ab = ba). The associative property states that the grouping of numbers being added or multiplied does not affect the result ((a + b) + c = a + (b + c)). Neither property holds for subtraction or division. The distributive property links multiplication and addition and lets you expand expressions: a(b + c) = ab + ac.

Order of Operations

In order to correctly evaluate mathematical expressions, it is important to understand and follow the order of operations. This order, often remembered through the acronym PEMDAS (Parentheses, Exponents, Multiplication and Division from left to right, Addition and Subtraction from left to right), provides a set of rules for performing operations in a specific order. Adhering to the order of operations ensures that calculations are done accurately and consistently. It is a fundamental concept that helps maintain clarity and precision in AI programming and mathematical computations.
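
As a quick illustration, Python evaluates arithmetic using the same precedence rules that PEMDAS describes. This is a small sketch; the variable names are arbitrary.

```python
# Python applies the same precedence rules that PEMDAS describes.
a = 2 + 3 * 4 ** 2    # exponent first (16), then 3 * 16 = 48, then 2 + 48
b = (2 + 3) * 4 ** 2  # parentheses first: 5 * 16
c = 8 / 4 * 2         # same precedence, evaluated left to right: (8 / 4) * 2
d = 10 - 4 - 3        # left to right: (10 - 4) - 3
print(a, b, c, d)     # 50 80 4.0 3
```

One caveat: in Python, exponentiation groups right to left, so `2 ** 3 ** 2` is `2 ** 9`, which equals 512.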

Algebra

Variables and Constants

Variables and constants play a fundamental role in algebra, and they are crucial in AI programming. Variables represent unknown quantities or values that can change, while constants are fixed values. Variables are often represented by letters, and they can be used to create algebraic expressions and equations. Understanding the relationship between variables and constants is important for solving problems involving unknowns, modeling real-world situations, and manipulating mathematical equations in AI applications.

Expressions and Equations

Algebraic expressions are combinations of variables, constants, and mathematical operations such as addition, subtraction, multiplication, and division. These expressions let us represent relationships and perform calculations. An equation, on the other hand, states that two expressions are equal by joining them with an equals sign. Solving an equation means finding the value or values of the variable that make both sides equal. Both expressions and equations are essential components of AI algorithms that involve mathematical modeling and problem-solving.

Solving Linear Equations

Linear equations, in which variables appear only to the first power, are among the most basic and important types of equations in algebra. Solving a linear equation means isolating the variable on one side by applying the same inverse operation to both sides: adding or subtracting the same quantity, multiplying or dividing by the same nonzero number, and expanding or collecting like terms. For example, 3x + 5 = 20 becomes 3x = 15 after subtracting 5, and x = 5 after dividing by 3. Mastery of linear equations enables AI programmers to analyze data, make predictions, and create mathematical models that aid decision-making and problem-solving.
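
A minimal Python sketch of solving a*x + b = c with inverse operations, assuming integer coefficients; `solve_linear` is a hypothetical helper, and `fractions.Fraction` keeps the answer exact instead of rounding.

```python
from fractions import Fraction


def solve_linear(a: int, b: int, c: int) -> Fraction:
    """Solve a*x + b = c for x, assuming integer coefficients."""
    if a == 0:
        raise ValueError("a must be nonzero for a unique solution")
    # Subtract b from both sides:  a*x = c - b
    # Divide both sides by a:      x = (c - b) / a
    return Fraction(c - b, a)


print(solve_linear(3, 5, 20))  # 5
print(solve_linear(2, 1, 4))   # 3/2
```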

Polynomials and Factoring

Polynomials are algebraic expressions built from variables raised to non-negative whole-number powers, multiplied by coefficients and added together, such as x^2 - 5x + 6. They are used to represent a wide range of mathematical concepts and relationships in AI. Factoring a polynomial means rewriting it as a product of simpler expressions, for example x^2 - 5x + 6 = (x - 2)(x - 3), which makes it easier to analyze and manipulate. Factoring aids in simplifying complex equations, identifying patterns, and solving higher-order polynomial equations, since the roots can be read directly from the factors.
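
One way to check a factorization is to multiply the factors back out. The sketch below assumes polynomials are stored as coefficient lists with the highest power first; `poly_mul` and `poly_eval` are hypothetical helper names, and evaluation uses Horner's method.

```python
def poly_mul(p: list, q: list) -> list:
    """Multiply two polynomials given as coefficient lists (highest power first)."""
    result = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            result[i + j] += a * b  # each pair of terms contributes to one power
    return result


def poly_eval(p: list, x):
    """Evaluate a polynomial at x using Horner's method."""
    total = 0
    for coeff in p:
        total = total * x + coeff
    return total


# (x - 2)(x - 3) expands to x^2 - 5x + 6
print(poly_mul([1, -2], [1, -3]))  # [1, -5, 6]
# The roots read off the factors make the polynomial zero
print(poly_eval([1, -5, 6], 2), poly_eval([1, -5, 6], 3))  # 0 0
```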

Quadratic Equations

Quadratic equations are polynomial equations in which the highest power of the variable is two, written in standard form as ax^2 + bx + c = 0 with a ≠ 0. Their graphs are parabolas, which makes them useful in AI and mathematical modeling wherever a relationship curves upward or downward. Solving quadratic equations involves methods such as factoring, completing the square, and using the quadratic formula x = (-b ± sqrt(b^2 - 4ac)) / (2a). The discriminant b^2 - 4ac tells you whether there are two real roots, one repeated root, or no real roots. Understanding quadratic equations is critical for analyzing real-world data, optimizing algorithms, and predicting outcomes in AI applications.
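
A minimal Python sketch of the quadratic formula that returns only real roots and uses the discriminant to decide how many there are; `solve_quadratic` is a hypothetical name, and complex roots are deliberately not handled.

```python
import math


def solve_quadratic(a: float, b: float, c: float) -> list:
    """Return the real roots of a*x**2 + b*x + c = 0, smallest first."""
    if a == 0:
        raise ValueError("a must be nonzero for a quadratic equation")
    disc = b * b - 4 * a * c        # the discriminant
    if disc < 0:
        return []                   # no real roots
    root = math.sqrt(disc)
    x1 = (-b - root) / (2 * a)
    x2 = (-b + root) / (2 * a)
    return sorted({x1, x2})         # the set collapses the repeated root when disc == 0


print(solve_quadratic(1, -5, 6))  # [2.0, 3.0]
print(solve_quadratic(1, 2, 1))   # [-1.0]
print(solve_quadratic(1, 0, 1))   # []
```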

Geometry

Lines, Angles, and Triangles

Geometry is a branch of mathematics that deals with the properties and relationships of shapes and forms. Lines, angles, and triangles are fundamental elements in geometry that are widely used in AI applications. Lines are straight paths that extend indefinitely in both directions. Angles are formed where two lines or line segments meet and are measured in degrees or radians (most programming libraries expect radians). Triangles are polygons with three sides and three angles, and their interior angles always sum to 180 degrees. Understanding these geometric concepts allows AI programmers to work with spatial data, image processing, and computer vision algorithms.

Circles and Arcs

A circle is the set of all points in a plane that are equidistant from a center point. Its key measurements are the radius (the distance from the center to any point on the circle) and the diameter (twice the radius); its circumference is 2πr and its area is πr^2. An arc is the portion of a circle between two endpoints. Circles and arcs appear in AI tasks such as mapping, navigation, and computer vision.
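
A short Python sketch of two circle calculations: the arc length s = r·θ (with θ in radians) and a test for whether a point lies on a circle. The function names and the tolerance are illustrative assumptions.

```python
import math


def arc_length(radius: float, angle_deg: float) -> float:
    """Length of the arc cut off by a central angle given in degrees."""
    return radius * math.radians(angle_deg)  # s = r * theta, theta in radians


def on_circle(point, center, radius, tol=1e-9) -> bool:
    """True if point lies (within tol) at distance radius from center."""
    return abs(math.dist(point, center) - radius) <= tol


print(arc_length(2, 180))            # half the circumference: 2*pi, about 6.283
print(on_circle((3, 4), (0, 0), 5))  # True, since sqrt(3^2 + 4^2) = 5
```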

Polygons and Quadrilaterals

Polygons are closed shapes with straight sides, and quadrilaterals are specific types of polygons with four sides. Understanding their properties, including the number of sides, angles, and symmetry, is crucial for AI applications such as computer graphics, image recognition, and pattern analysis. Quadrilaterals, such as squares, rectangles, parallelograms, and trapezoids, have unique characteristics that make them suitable for representing real-world objects and structures in AI algorithms and simulations.

Similarity and Congruence

Similarity and congruence are concepts used to compare and classify geometric objects based on their properties and relationships. Similar figures have the same shape but not necessarily the same size (one is a scaled copy of the other), while congruent figures have the same shape and the same size, so every pair of congruent figures is also similar. Understanding similarity and congruence is important in AI for tasks such as image recognition, object tracking, and pattern matching. These concepts allow AI algorithms to identify and analyze similarities or matches between objects, assisting in applications like facial recognition and object detection.

Coordinate Geometry

Coordinate geometry combines algebraic techniques with geometric concepts to study the relationships between points, lines, and shapes on a coordinate plane. It uses a system of axes and numerical coordinates to represent geometric objects. Coordinate geometry is essential in AI for tasks involving spatial data analysis, graphing, and computer vision. It allows AI programmers to define and manipulate geometric objects accurately, perform calculations on spatial data, and create mathematical models that represent real-world phenomena.
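
A minimal Python sketch of three basic coordinate-geometry formulas (distance, midpoint, and slope between two points), with points represented as (x, y) tuples by assumption.

```python
import math


def distance(p, q) -> float:
    """Euclidean distance: sqrt((x2 - x1)^2 + (y2 - y1)^2)."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


def midpoint(p, q) -> tuple:
    """Point halfway between p and q."""
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)


def slope(p, q) -> float:
    """Rise over run of the line through p and q."""
    dx = q[0] - p[0]
    if dx == 0:
        raise ValueError("vertical line: slope is undefined")
    return (q[1] - p[1]) / dx


p, q = (1, 2), (4, 6)
print(distance(p, q), midpoint(p, q), slope(p, q))  # 5.0 (2.5, 4.0) 1.3333333333333333
```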

Functions and Graphs

Introduction to Functions

A function is a rule that assigns each input from a set called the domain exactly one output; the set of all outputs is called the range. Functions are widely used in AI for tasks such as machine learning, data analysis, and modeling complex systems. Functions can be represented using algebraic expressions, tables, or graphs. Understanding the concept of functions is critical in AI, as it enables programmers to create algorithms that transform inputs into meaningful outputs, learn patterns from data, and make predictions based on mathematical models.

Types of Functions

There are various types of functions used in AI, each serving different purposes and exhibiting unique characteristics. Some common types include linear functions, quadratic functions, exponential functions, logarithmic functions, and trigonometric functions. Each function type has specific properties, graphs, and applications in AI. Linear functions, for example, describe a straight-line relationship with a constant rate of change, while exponential functions describe quantities that grow or decay by a constant factor over each equal step of the input. Knowledge of different types of functions empowers AI programmers to choose appropriate mathematical models for different scenarios and to develop algorithms tailored to specific tasks.

Graphing Functions

Graphing functions visually represents the relationship between inputs and outputs. It helps in understanding and analyzing the behavior of functions. Graphs can display various features, such as slope, intercepts, symmetry, and asymptotes. They provide valuable insights into the patterns, trends, and properties of functions. Graphing functions is crucial in AI, as it supports visualization, data interpretation, and pattern recognition. By plotting functions, AI programmers can gain a deeper understanding of mathematical relationships and develop algorithms for tasks like data visualization, regression analysis, and machine learning.

Transformations of Functions

Transformations of functions involve modifying the shape, position, or size of a function's graph by applying various operations. Common transformations include translations (shifts), reflections, and vertical or horizontal stretches and compressions. Understanding these transformations is essential in AI for tasks such as image processing, signal analysis, and data manipulation. Transforming functions allows AI algorithms to modify or enhance data, extract features, and perform operations on signals or images, contributing to tasks such as image recognition, speech processing, and data augmentation.

Inverse Functions

Two functions are inverses of each other when each undoes the other: if f(x) = y, then f⁻¹(y) = x. Inverse functions are used to reverse the effect of a function, that is, to recover the original input from an output. Only a one-to-one function, which never maps two different inputs to the same output, has an inverse. Inverse functions matter in AI-related tasks such as data encoding and decoding, and encryption and decryption, where they allow algorithms to reverse operations and reconstruct original data from encoded or encrypted forms. Understanding inverse functions helps AI programmers develop algorithms that ensure data security, privacy, and integrity.
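
A small Python sketch of an inverse pair: the example function f(x) = 2x + 3 is undone by applying the inverse operations in reverse order. The functions `f` and `f_inv` are illustrative, and the standard-library pair `math.exp`/`math.log` is checked the same way.

```python
import math


def f(x: float) -> float:
    return 2 * x + 3


def f_inv(y: float) -> float:
    # Undo f in reverse order: subtract 3, then divide by 2.
    return (y - 3) / 2


# Composing a function with its inverse returns the original input.
for x in [-2.0, 0.0, 1.5, 10.0]:
    assert f_inv(f(x)) == x
    assert math.isclose(math.log(math.exp(x)), x)  # exp and log are inverses

print(f(4), f_inv(11))  # 11 4.0
```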

Statistics and Probability

Descriptive Statistics

Descriptive statistics involve summarizing, organizing, and describing data using various statistical measures. These measures include measures of central tendency (mean, median, mode), measures of dispersion (range, variance, standard deviation), and measures of shape (skewness, kurtosis). Descriptive statistics allow AI programmers to gain insights from data, understand its distribution and characteristics, and make data-driven decisions. They are essential in AI for tasks such as data profiling, data exploration, and data visualization.

Measures of Central Tendency

Measures of central tendency provide information about the center or average value of a dataset. The most commonly used measures of central tendency are the mean, median, and mode. The mean is the arithmetic average of the data, the median is the middle value when the data is sorted, and the mode is the most frequently occurring value. Understanding measures of central tendency is crucial in AI for tasks such as data analysis, anomaly detection, and pattern recognition. They enable AI programmers to summarize and compare data, identify outliers, and detect trends or patterns in datasets.

Measures of Dispersion

Measures of dispersion quantify how spread out the data points in a dataset are: the range of values, how far values typically lie from the mean, and how consistent the data is. Common measures include the range (maximum minus minimum), the variance (the average squared deviation from the mean), and the standard deviation (the square root of the variance, expressed in the same units as the data). Understanding measures of dispersion is important in AI for tasks such as data modeling, risk analysis, and optimization. They allow AI programmers to assess the variability and stability of data, identify outliers or extreme values, and make informed decisions based on the spread of the data.

Probability Theory

Probability theory is the mathematical framework for analyzing and quantifying uncertainty and randomness. It deals with the likelihood or chance of events occurring. Understanding probability theory is crucial in AI for tasks such as machine learning, decision making, and data analysis. Probability theory allows AI algorithms to model uncertainty, assess risks, make predictions, and estimate probabilities based on data or prior knowledge. It is fundamental for tasks such as natural language processing, pattern recognition, and predictive modeling.
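
A small Python sketch, assuming two fair six-sided dice, that computes the probability of rolling a total of 7 in two ways: exactly, by counting equally likely outcomes, and approximately, by Monte Carlo simulation (estimating a probability from data).

```python
import random
from fractions import Fraction
from itertools import product

# Exact: count favorable outcomes among the 36 equally likely pairs.
outcomes = list(product(range(1, 7), repeat=2))
favorable = [o for o in outcomes if sum(o) == 7]
p_seven = Fraction(len(favorable), len(outcomes))
print(p_seven)  # 1/6

# Estimate: simulate many rolls and measure the observed frequency.
random.seed(0)  # fixed seed so the run is repeatable
trials = 100_000
hits = sum(1 for _ in range(trials)
           if random.randint(1, 6) + random.randint(1, 6) == 7)
estimate = hits / trials
print(estimate)  # close to 0.1667
```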

Statistical Distributions

Statistical distributions represent the patterns or frequencies of possible outcomes of a random process or event. They provide a mathematical description of the probabilities associated with different values or ranges of values. Understanding different statistical distributions is important in AI for tasks such as data modeling, hypothesis testing, and simulation. Statistical distributions enable AI programmers to generate synthetic data, assess the likelihood of events, and make predictions based on the known distribution of data. They are used in various AI algorithms, including Bayesian networks, Monte Carlo simulations, and probabilistic graphical models.
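
As an illustration, Python's `statistics.NormalDist` models a normal distribution. The mean of 170 and standard deviation of 10 below are hypothetical parameters chosen only for the example.

```python
from statistics import NormalDist

heights = NormalDist(mu=170, sigma=10)  # hypothetical parameters

# Likelihood of events, computed from the cumulative distribution function.
p_below_180 = heights.cdf(180)                       # about 0.841
p_within_1sd = heights.cdf(180) - heights.cdf(160)   # about 0.683

# Synthetic data drawn from the same distribution (seeded for repeatability).
samples = heights.samples(5, seed=42)
print(round(p_below_180, 3), round(p_within_1sd, 3), len(samples))  # 0.841 0.683 5
```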

Calculus

Limits and Continuity

Limits and continuity are fundamental concepts in calculus that are essential for understanding the behavior and properties of functions. Limits determine the value a function approaches as its input approaches a specific value. Continuity refers to the absence of any abrupt changes or breaks in the graph of a function. Limits and continuity allow AI programmers to analyze the behavior of functions, identify critical points, and make precise calculations involving rates of change, growth, and optimization.

Differentiation

Differentiation is a key concept in calculus that involves finding the rate of change of a function at a specific point. It provides information about the slope or gradient of a function at a given point. Understanding differentiation is crucial in AI for tasks such as optimization, machine learning, and data analysis. Differentiation enables AI programmers to analyze the behavior of functions, calculate rates of change, find extrema, and optimize algorithms based on gradient-based methods.
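
A minimal Python sketch of estimating a derivative numerically with a central difference, (f(x + h) - f(x - h)) / (2h). The step size `h` is an assumed default; for f(x) = x^3 the exact derivative at 2 is 3·2^2 = 12.

```python
def derivative(f, x: float, h: float = 1e-5) -> float:
    """Approximate f'(x) with a central difference."""
    return (f(x + h) - f(x - h)) / (2 * h)


def cube(x: float) -> float:
    return x ** 3   # exact derivative is 3 * x**2


print(derivative(cube, 2.0))  # very close to 12
```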

Applications of Differentiation

The concept of differentiation is widely applied in AI for various purposes. Some common applications include optimization algorithms, machine learning algorithms, and data analysis techniques. Differentiation allows AI programmers to optimize objective functions, estimate model parameters using gradient descent algorithms, and analyze data using regression or classification techniques. It plays a crucial role in AI algorithms such as neural networks, support vector machines, and data-driven optimization.
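
To show how derivatives drive parameter estimation, here is a minimal gradient-descent sketch that fits a line y = w·x + b by minimizing mean squared error. The learning rate, step count, and toy data (generated from y = 2x + 1) are illustrative assumptions.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y ~ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = mean(error^2) with respect to w and b
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Step against the gradient to reduce the error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]   # generated from y = 2x + 1
w, b = fit_line(xs, ys)
print(round(w, 4), round(b, 4))  # 2.0 1.0
```

The learning rate matters: if it is too large, the updates overshoot and diverge instead of settling at the minimum.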

Integration

Integration computes the accumulated total of a changing quantity, which can be pictured as the area under a curve. By the fundamental theorem of calculus, it is also the reverse of differentiation: integrating a function's derivative recovers the original function up to a constant. Understanding integration is important in AI for tasks such as signal processing, image analysis, and data analysis. Integration enables AI programmers to analyze time series data, extract features, and identify patterns or trends. It is a fundamental concept in AI algorithms that involve filtering, feature extraction, and time series analysis.
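
A minimal sketch of numerical integration with the trapezoidal rule, which approximates the area under a curve by summing thin trapezoids. The number of slices `n` is an assumed default; the exact value of the integral of x^2 from 0 to 1 is 1/3.

```python
def trapezoid(f, a: float, b: float, n: int = 1000) -> float:
    """Approximate the integral of f over [a, b] using n trapezoids."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))   # endpoints count half
    for i in range(1, n):
        total += f(a + i * h)     # interior points count fully
    return total * h


print(trapezoid(lambda x: x * x, 0.0, 1.0))  # about 0.3333334
```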

Applications of Integration

Integration is widely used in AI, particularly in signal processing and computer vision. Integral transforms such as the Fourier transform let AI programmers move signals or images into the frequency domain, detect patterns, and perform operations such as noise reduction, feature extraction, and enhancement. It is a key component of techniques such as Fourier analysis and image processing.

Linear Algebra

Matrix Operations

Matrix operations play a crucial role in linear algebra and AI. Matrices are rectangular arrays of numbers, and common matrix operations include addition, subtraction, multiplication, and inversion. Addition and subtraction work element by element on matrices of the same shape. Matrix multiplication is defined when the first matrix has as many columns as the second has rows: each entry of the product is the dot product of a row of the first matrix with a column of the second, and in general AB ≠ BA. A square matrix A with a nonzero determinant has an inverse A⁻¹, so the system Ax = b can be solved as x = A⁻¹b. Understanding matrix operations is essential in AI for tasks such as data preprocessing, dimensionality reduction, and solving systems of equations in machine learning and optimization.
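
A pure-Python sketch of matrix addition and multiplication, assuming matrices are stored as lists of rows. In practice a library such as NumPy would be used; this version only makes the row-times-column rule explicit.

```python
def matadd(A, B):
    """Element-wise sum of two matrices of the same shape."""
    return [[a + b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(A, B)]


def matmul(A, B):
    """Entry (i, j) is the dot product of row i of A with column j of B."""
    if len(A[0]) != len(B):
        raise ValueError("columns of A must equal rows of B")
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matadd(A, B))  # [[6, 8], [10, 12]]
print(matmul(A, B))  # [[19, 22], [43, 50]]
print(matmul(B, A))  # [[23, 34], [31, 46]]  (order matters)
```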

Vector Spaces

Vector spaces are sets of vectors that exhibit certain properties, such as closure under addition and scalar multiplication. Understanding vector spaces is important in linear algebra and AI, as they provide a framework for expressing and manipulating data. Vector spaces allow AI programmers to represent and analyze multidimensional data, perform operations such as linear transformations and projections, and develop algorithms for tasks such as clustering, pattern recognition, and machine learning.

Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors are important concepts in linear algebra with numerous applications in AI. For a square matrix A, a nonzero vector v is an eigenvector with eigenvalue λ if Av = λv: multiplying by the matrix only scales v (flipping it when λ is negative) without moving it off the line it lies along. Eigenvalues and eigenvectors allow AI programmers to analyze the behavior of systems, perform dimensionality reduction, and extract meaningful features from data. They are widely used in AI algorithms such as principal component analysis, spectral clustering, and feature extraction.
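
One simple way to find the largest eigenvalue is power iteration: repeatedly multiply a vector by the matrix and normalize it. This is a minimal sketch, assuming the matrix has a single dominant eigenvalue and the starting vector is not orthogonal to its eigenvector. For the example matrix, the exact answer is (5 + √5)/2.

```python
import math


def power_iteration(A, steps=100):
    """Estimate the dominant eigenvalue and a unit eigenvector of square matrix A."""
    n = len(A)
    v = [1.0] * n
    for _ in range(steps):
        w = [sum(A[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]   # keep the vector at unit length
    # Rayleigh quotient v . (A v) gives the eigenvalue for a unit vector v
    Av = [sum(A[i][j] * v[j] for j in range(n)) for i in range(n)]
    eigenvalue = sum(v[i] * Av[i] for i in range(n))
    return eigenvalue, v


A = [[2.0, 1.0], [1.0, 3.0]]
lam, v = power_iteration(A)
print(round(lam, 6))  # 3.618034, which is (5 + sqrt(5)) / 2
```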

Systems of Linear Equations

Systems of linear equations are sets of equations that involve multiple variables and their relationships. Solving systems of linear equations is a common problem in AI, as it arises in various applications across different domains. Understanding systems of linear equations allows AI programmers to model real-world situations, perform data fitting, and optimize mathematical models. Solving these systems involves techniques such as matrix methods, Gaussian elimination, and the use of inverse matrices or determinants.
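
A minimal Python sketch of Gaussian elimination with partial pivoting followed by back substitution. The function name `solve` is hypothetical, and a production system would use a tested library routine instead. The example system is 2x + y - z = 8, -3x - y + 2z = -11, -2x + y + 2z = -3, whose solution is x = 2, y = 3, z = -1.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    # Augmented matrix [A | b], copied as floats so the inputs are not modified
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        # Use the row with the largest entry in this column as the pivot
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular or nearly singular")
        M[col], M[pivot] = M[pivot], M[col]
        # Eliminate this column from every row below the pivot
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution, from the last row upward
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


A = [[2, 1, -1], [-3, -1, 2], [-2, 1, 2]]
b = [8, -11, -3]
print([round(v, 10) for v in solve(A, b)])  # [2.0, 3.0, -1.0]
```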

Linear Transformations

Linear transformations are operations that preserve vector addition and scalar multiplication properties. They are widely used in AI for tasks such as image processing, feature extraction, and data analysis. Linear transformations allow AI programmers to map or transform data from one space to another, identify patterns, and extract meaningful features. Understanding linear transformations is crucial for tasks such as image recognition, feature engineering, and data compression.
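
A rotation of the plane is a familiar linear transformation. The sketch below rotates 2D vectors and checks the two defining properties, T(u + v) = T(u) + T(v) and T(c·u) = c·T(u), on example vectors chosen for illustration.

```python
import math


def rotate(v, degrees):
    """Rotate a 2D vector counterclockwise about the origin."""
    t = math.radians(degrees)
    x, y = v
    return (x * math.cos(t) - y * math.sin(t),
            x * math.sin(t) + y * math.cos(t))


def close(p, q, tol=1e-12):
    """Compare two vectors component by component within a small tolerance."""
    return all(math.isclose(a, b, abs_tol=tol) for a, b in zip(p, q))


u, v, c = (1.0, 2.0), (3.0, -1.0), 2.5
tu, tv = rotate(u, 30), rotate(v, 30)

# Linearity: T(u + v) = T(u) + T(v) and T(c * u) = c * T(u)
assert close(rotate((u[0] + v[0], u[1] + v[1]), 30), (tu[0] + tv[0], tu[1] + tv[1]))
assert close(rotate((c * u[0], c * u[1]), 30), (c * tu[0], c * tu[1]))
print(rotate((1.0, 0.0), 90))  # approximately (0.0, 1.0)
```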

Discrete Mathematics

Sets and Set Operations

Sets and set operations are fundamental concepts in discrete mathematics. Sets are collections of distinct objects or elements, and set operations include union, intersection, complement, and the Cartesian product. Understanding sets and set operations is essential in AI for tasks such as data representation, database operations, and logic-based algorithms. Sets allow AI programmers to organize and classify data, perform operations on large datasets efficiently, and apply principles of logic and set theory in tasks like rule-based reasoning and data filtering.
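
Python's built-in `set` type supports these operations directly. In the sketch below the element names are arbitrary, and the complement is taken relative to an explicitly chosen universe, since a complement is only defined with respect to one.

```python
A = {"cat", "dog", "bird"}
B = {"dog", "fish"}
universe = A | B | {"horse"}   # the chosen universal set

union = A | B                  # elements in A or B
intersection = A & B           # elements in both
difference = A - B             # elements in A but not B
complement_A = universe - A    # elements of the universe not in A
cartesian = {(a, b) for a in A for b in B}  # all ordered pairs, 3 * 2 = 6

print(sorted(union))         # ['bird', 'cat', 'dog', 'fish']
print(sorted(intersection))  # ['dog']
print(sorted(complement_A))  # ['fish', 'horse']
print(len(cartesian))        # 6
```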

Relations and Functions

Relations and functions describe the relationships between elements in sets. Relations can be represented by ordered pairs or matrices, and they can describe connections between objects, properties, or associations. Functions are specific types of relations that have unique properties, such as each input having a unique output. Understanding relations and functions is essential in AI for tasks such as data organization, data analysis, and graph-based algorithms. They allow AI programmers to represent connections between objects, perform operations on relational databases, and analyze complex networks or graphs.

Combinatorics

Combinatorics is the branch of discrete mathematics that deals with counting, arranging, and organizing objects or elements. It provides techniques and methods for solving problems related to permutations, combinations, and probability. Understanding combinatorics is important in AI for tasks such as data summarization, feature selection, and optimization algorithms. Combinatorial techniques allow AI programmers to analyze large datasets, select relevant features, and design efficient algorithms for solving optimization problems, such as the traveling salesman problem or the knapsack problem.
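
A few standard counts using Python's `math` and `itertools` modules. The feature names are hypothetical; the last line counts distinct round trips through 10 cities in a symmetric traveling salesman problem, (n - 1)!/2, which shows why brute force quickly becomes infeasible.

```python
import math
from itertools import combinations

features = ["age", "income", "height", "weight"]   # hypothetical feature names

pairs = list(combinations(features, 2))   # unordered pairs of features
n_pairs = math.comb(4, 2)                 # 6 combinations
n_ordered = math.perm(4, 2)               # 12 permutations (order matters)
n_subsets = 2 ** len(features)            # 16 possible feature subsets
n_tours = math.factorial(10 - 1) // 2     # 181440 distinct tours of 10 cities

print(len(pairs), n_pairs, n_ordered, n_subsets, n_tours)  # 6 6 12 16 181440
```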

Graph Theory

Graph theory is the study of mathematical structures called graphs, which consist of nodes (vertices) and connections (edges) between them. Graph theory is essential in AI for tasks such as network analysis, social network analysis, and computer network optimization. Graphs allow AI programmers to represent and analyze complex relationships, model connectivity patterns, and develop algorithms for tasks such as route optimization, recommendation systems, and network clustering.
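
A minimal sketch of a graph stored as an adjacency list (a dictionary mapping each node to its neighbors), with breadth-first search finding a route with the fewest edges. The graph and node names are made up for illustration; weighted route optimization would need an algorithm such as Dijkstra's instead.

```python
from collections import deque


def shortest_path(graph, start, goal):
    """Return a path with the fewest edges from start to goal, or None."""
    previous = {start: None}   # also records which nodes were visited
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:   # walk back to the start
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in graph.get(node, []):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


graph = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D", "E"],
    "D": ["B", "C", "F"],
    "E": ["C", "F"],
    "F": ["D", "E"],
}
print(shortest_path(graph, "A", "F"))  # ['A', 'B', 'D', 'F']
```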

Number Theory

Number theory is the study of properties and relationships of numbers, particularly integers. It covers topics such as prime numbers, divisibility, modular arithmetic, and number patterns. Understanding number theory is important in AI for tasks such as cryptography, hashing, and optimization algorithms. Number theory concepts allow AI programmers to develop secure encryption algorithms, generate unique identifiers, or improve performance in algorithms that involve integer operations.
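
A short sketch of a few number-theory building blocks in Python's standard library: the greatest common divisor, fast modular exponentiation with three-argument `pow`, a modular inverse (`pow` with exponent -1, Python 3.8+), and a simple trial-division primality test (`is_prime` is a hypothetical helper).

```python
import math


def is_prime(n: int) -> bool:
    """Trial division up to the integer square root of n."""
    if n < 2:
        return False
    for d in range(2, math.isqrt(n) + 1):
        if n % d == 0:
            return False
    return True


print(math.gcd(84, 36))   # 12
print(pow(7, 128, 13))    # 3, computed without forming the huge number 7**128
print(pow(3, -1, 11))     # 4, since 3 * 4 = 12, which is 1 (mod 11)
print([n for n in range(20) if is_prime(n)])  # [2, 3, 5, 7, 11, 13, 17, 19]
```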

Boolean Algebra

Logical Operations

Boolean algebra is a branch of algebra that deals with logical variables and operations, particularly the values of true and false. Logical operations, such as conjunction (AND), disjunction (OR), negation (NOT), and implication (IF-THEN), are fundamental in Boolean algebra. Understanding logical operations is crucial in AI for tasks such as logical reasoning, rule-based systems, and decision-making algorithms. By applying logical operations, AI programmers can represent rules, make inferences, and reason about complex systems or data.
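
A small Python sketch that builds the truth table for AND, OR, NOT, and implication. Python has no implication operator, so the hypothetical helper `implies` uses the standard equivalence "p implies q" = (not p) or q.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication: false only when p is true and q is false."""
    return (not p) or q


rows = []
for p, q in product([False, True], repeat=2):
    rows.append((p, q, p and q, p or q, not p, implies(p, q)))

print("p      q      AND    OR     NOT p  p->q")
for row in rows:
    print(" ".join(f"{str(value):6}" for value in row))
```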

Boolean Functions

Boolean functions are mathematical functions that operate on one or more Boolean variables and produce Boolean outputs. Boolean functions are used in AI for tasks such as logic circuit design, rule-based systems, and decision trees. They allow AI programmers to express and manipulate logical relationships, design circuits or systems that perform logical operations, and create algorithms for tasks such as pattern matching, classification, and logical reasoning.

Boolean Expressions

Boolean expressions are combinations of Boolean variables, constants, and logical operations. They represent logical relationships and conditions that evaluate to either true or false. Boolean expressions are widely used in AI for tasks such as rule-based systems, filtering, and decision-making algorithms. Understanding Boolean expressions allows AI programmers to represent and manipulate logical conditions, design complex rule-based systems, and create algorithms for tasks such as data filtering, decision trees, or expert systems.

Boolean Laws

Boolean laws, also known as Boolean identities or laws of Boolean algebra, are a set of rules that describe the behavior and relationships between logical variables and operations. These laws include the commutative law, associative law, distributive law, and De Morgan’s laws. Understanding Boolean laws is essential in AI for tasks such as logic circuit design, rule-based systems, and optimization algorithms. By applying Boolean laws, AI programmers can simplify logical expressions, optimize logic circuits, and improve the performance of algorithms that involve Boolean operations.
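
Because a Boolean function has only finitely many inputs, a law can be verified by checking every combination. This sketch (the helper `equivalent` is hypothetical) confirms De Morgan's laws, the distributive law, and a simplification, and shows a tempting but false identity failing.

```python
from itertools import product


def equivalent(f, g, n_vars: int) -> bool:
    """True if Boolean functions f and g agree on every input combination."""
    return all(f(*vals) == g(*vals) for vals in product([False, True], repeat=n_vars))


# De Morgan's laws
de_morgan_and = equivalent(lambda a, b: not (a and b), lambda a, b: (not a) or (not b), 2)
de_morgan_or = equivalent(lambda a, b: not (a or b), lambda a, b: (not a) and (not b), 2)
# Distributive law: a AND (b OR c) == (a AND b) OR (a AND c)
distributive = equivalent(lambda a, b, c: a and (b or c),
                          lambda a, b, c: (a and b) or (a and c), 3)
# Simplification: (a AND b) OR (a AND NOT b) reduces to a
simplified = equivalent(lambda a, b: (a and b) or (a and not b), lambda a, b: a, 2)
# Not a law: NOT (a AND b) differs from (NOT a) AND (NOT b)
wrong = equivalent(lambda a, b: not (a and b), lambda a, b: (not a) and (not b), 2)

print(de_morgan_and, de_morgan_or, distributive, simplified, wrong)  # True True True True False
```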

Boolean Simplification

Boolean simplification involves simplifying complex Boolean expressions or logical circuits to their simplest form. It is an important skill in Boolean algebra and AI for tasks such as logic circuit design, logic minimization, and optimizing rule-based systems. Boolean simplification allows AI programmers to reduce the complexity of logical expressions, eliminate redundant operations or variables, and improve the efficiency of algorithms that involve logical operations.

Optimization Theory

Basics of Optimization

Optimization theory is the branch of mathematics that deals with finding the best or optimal solutions to problems. It involves maximizing or minimizing a given objective function while satisfying certain constraints. Understanding the basics of optimization is crucial in AI for tasks such as machine learning, algorithm design, and decision-making algorithms. Optimization theory allows AI programmers to develop algorithms that improve efficiency, optimize performance, and make optimal decisions based on mathematical models.

Optimization Algorithms

Optimization algorithms are procedures or methods used to find optimal solutions to problems. There are various types of optimization algorithms, including gradient-based methods, evolutionary algorithms, and metaheuristic algorithms. Understanding optimization algorithms is important in AI for tasks such as machine learning, parameter estimation, and algorithm optimization. Optimization algorithms allow AI programmers to solve complex problems, fine-tune models, and optimize performance in various applications like regression, classification, and neural network training.

Constraint Optimization

Constraint optimization deals with optimizing a function subject to a set of constraints or conditions, which restrict the solutions that can be considered (the feasible solutions). It is important in AI for tasks such as resource allocation, scheduling, planning, and constraint satisfaction, because it lets AI programmers find solutions that meet specific requirements in real-world scenarios.

Linear Programming

Linear programming is a specific type of optimization that deals with maximizing or minimizing a linear objective function subject to linear equality or inequality constraints. Linear programming is widely used in AI for tasks such as resource allocation, production planning, and optimization problems with linear relationships. It allows AI programmers to optimize processes, allocate resources efficiently, and make data-driven decisions based on mathematical models.

Nonlinear Programming

Nonlinear programming optimizes an objective function when the objective, at least one of the constraints, or both are nonlinear. It is important in AI for tasks such as machine learning, parameter estimation, and optimization problems with nonlinear relationships, including neural network training, whose loss functions are nonlinear. Nonlinear programming methods let AI programmers model nonlinear phenomena and tackle problems involving complex or non-convex functions, where a solver may find a local rather than a global optimum.
